Scientists Model Turbulence at Atomic Level

Scientists at HSE University and MIPT have developed a supercomputer-based method to model fluid flows at atomistic scales, making it possible to describe the emergence of turbulence. The researchers used the supercomputers cHARISMa and Desmos to compute the flow of a fluid consisting of several hundred million atoms. This method is already being used to simulate the flow of liquid-metal lead coolant in a nuclear reactor. The paper has been published in The International Journal of High Performance Computing Applications.

In computer modelling, a fluid is typically treated as a non-discrete medium, and its flow is described by the Navier-Stokes differential equations, which are solved numerically. Such models are referred to as continuum models, and they do not account for the behaviour of individual atoms and molecules within a fluid. However, scientists dealing with applied problems are often interested less in tranquil, laminar flows and more in turbulent flows, where fluids generate vortices of varying sizes that evolve stochastically over time and space. In the 1940s, the Soviet mathematician and academician Andrei Kolmogorov formulated a theory describing the evolution of vortices in turbulent flows, which demonstrates that larger vortices break up into smaller ones, down to scales of tens and hundreds of nanometres. At these sizes, known as Kolmogorov microscales, continuum methods are ineffective; instead, the fluid has to be modelled as individual atoms and molecules by numerically solving their equations of motion. Going from a continuum description to a discrete description can be particularly helpful in certain cases, such as the study of diffusion and the formation of particle clusters in turbulent flows. While these processes can be described in a continuum approximation, the accuracy of the underlying assumptions can only be validated through atomistic modelling.
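
For reference, the continuum description and the smallest turbulent length scale discussed in this paragraph are commonly written as follows. This is a textbook sketch that assumes an incompressible fluid with constant density $\rho$ and kinematic viscosity $\nu$ (an assumption; the article does not state which form of the equations the continuum models use). Here $\mathbf{u}$ is the velocity field, $p$ the pressure, $\varepsilon$ the mean rate of energy dissipation per unit mass, and $\eta$ the Kolmogorov length scale:

```latex
\begin{aligned}
\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\,\mathbf{u}
  &= -\frac{1}{\rho}\,\nabla p + \nu\,\nabla^{2}\mathbf{u},
  \qquad \nabla\cdot\mathbf{u} = 0, \\[4pt]
\eta &= \left(\frac{\nu^{3}}{\varepsilon}\right)^{1/4}.
\end{aligned}
```

The second line shows why the smallest vortices shrink as the flow becomes more energetic: for a fixed viscosity, a larger dissipation rate $\varepsilon$ gives a smaller $\eta$.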

To investigate the formation of turbulence, scientists at HSE University and MIPT have developed a concept making it possible to observe rapid fluid flow around obstacles at micrometre scales. The team devised a method to confine the fluid flow to a limited space and subsequently implemented it in two molecular modelling programs. Additionally, the researchers analysed the performance of the supercomputers used for these calculations and explored ways to optimise it.

'We have obtained a natural flow of fluid with vortices spontaneously arising from flowing around an obstacle at a scale of hundreds of millions of atoms; this has not been done before. The purpose of our new method is to gather data for specific scenarios, such as diffusion and flow near walls, and to accurately bridge the atomistic to continuum scales in areas of modelling where this connection is critically important,' comments Vladimir Stegailov, research team leader, Leading Research Fellow at the International Laboratory for Supercomputer Atomistic Modelling and Multi-scale Analysis of HSE University, and Head of the MIPT Laboratory of Supercomputer Methods in Condensed Matter Physics.

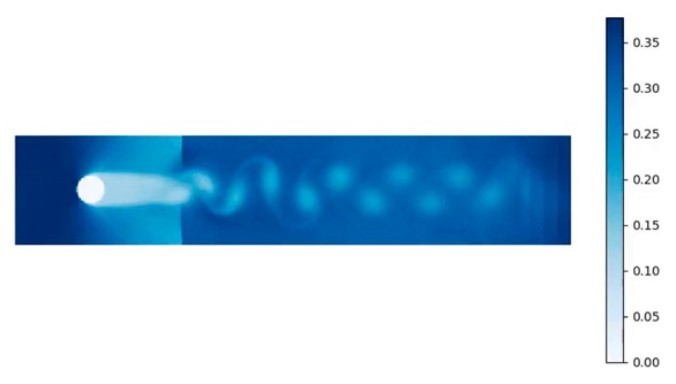

The modelled system consisted of a flat quasi-two-dimensional parallelepiped containing a cylindrical obstacle and several million to several hundred million atoms of fluid. A predetermined flow velocity was added to the thermal velocities of the atoms, and where this velocity was sufficiently high, vortices spontaneously formed in the flow after it passed around the cylinder. Thus, the scientists were able to simulate the formation of a pre-turbulent flow regime under natural conditions, without imposing any additional special conditions on the movement of the fluid.
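
The idea of adding a predetermined flow velocity to the thermal velocities can be illustrated with a minimal Python sketch. It is not the authors' code: the function name `initial_velocities`, the reduced units (`k_b = 1`), and all parameter values are hypothetical and chosen only for illustration. Each velocity component is drawn from a Maxwell-Boltzmann (Gaussian) distribution, and a uniform drift is then added along the flow direction x.

```python
import math
import random


def initial_velocities(n_atoms, temperature, mass, flow_speed, k_b=1.0, seed=0):
    """Return a list of (vx, vy, vz) tuples.

    Thermal part: each component is Gaussian with standard deviation
    sqrt(k_b * T / m), i.e. a Maxwell-Boltzmann distribution.
    Flow part: a constant drift `flow_speed` added to the x component.
    Reduced units (k_b = 1) are an assumption of this sketch.
    """
    rng = random.Random(seed)
    sigma = math.sqrt(k_b * temperature / mass)
    return [
        (
            rng.gauss(0.0, sigma) + flow_speed,  # thermal + imposed flow
            rng.gauss(0.0, sigma),               # thermal only
            rng.gauss(0.0, sigma),               # thermal only
        )
        for _ in range(n_atoms)
    ]


# Illustrative values only; the article does not give these numbers.
velocities = initial_velocities(n_atoms=10_000, temperature=1.0, mass=1.0, flow_speed=2.0)
mean_vx = sum(v[0] for v in velocities) / len(velocities)
mean_vy = sum(v[1] for v in velocities) / len(velocities)
print(f"mean vx = {mean_vx:.3f}, mean vy = {mean_vy:.3f}")
```

The mean x velocity comes out close to the imposed flow speed, while the transverse mean stays near zero, so the atoms keep their thermal motion while the fluid as a whole drifts towards the obstacle.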

The challenging aspect of the simulation was that the particles, during their movement, had to travel beyond the boundaries of the parallelepiped. Typically, in atomistic modelling, periodic boundary conditions are applied, meaning that atoms leaving the system on the right side are artificially reintroduced on the left side, with the same speed and direction of movement, in subsequent calculation steps. Thus, the system remains closed. From a computational perspective, this method is the most straightforward. In dealing with the vortex problem, however, the physicists had to devise modified periodic conditions that ensure the flow reverts to a non-turbulent state when it crosses the system's boundary. Otherwise, upon the atoms' return to the parallelepiped, the fluid would already be turbulent when encountering the obstacle, which would contradict the original formulation of the problem. The scientists suggested positioning virtual planes near the right boundary of the system. Upon crossing these planes, the particle velocity was recalculated, restoring the flow to a normal (laminar) state. This approach ensured that the return of atoms did not compromise the laminarity condition of the incoming flow.
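
A minimal one-dimensional Python sketch of this scheme is given below. It is an illustration under stated assumptions, not the authors' implementation: the article does not specify how velocities are recalculated at the virtual planes, so the sketch simply redraws the velocity from a Gaussian centred on the inflow speed. The function `step` and all of its parameters are hypothetical names.

```python
import random


def step(particles, dt, box_length, plane_positions, flow_speed, sigma, rng):
    """Advance particles by one time step along x (modified in place).

    particles: list of [x, vx] pairs.
    plane_positions: x coordinates of the virtual planes near the right
        boundary (hypothetical parameter of this sketch).
    On a left-to-right crossing of any plane, the velocity is redrawn as
    flow_speed + Gaussian noise. This redraw rule is an assumption; the
    article only says the velocity is "recalculated".
    """
    for p in particles:
        x_old = p[0]
        x_new = x_old + p[1] * dt

        # Velocity reset: forget the turbulent history before re-entry.
        if any(x_old < plane <= x_new for plane in plane_positions):
            p[1] = flow_speed + rng.gauss(0.0, sigma)

        # Ordinary periodic wrap: leave on the right, re-enter on the left.
        x_new %= box_length
        if x_new >= box_length:  # guard against float rounding at the edge
            x_new = 0.0
        p[0] = x_new


# Illustrative run with made-up numbers.
rng = random.Random(42)
box = 10.0
particles = [[rng.uniform(0.0, box), 1.0 + rng.gauss(0.0, 0.5)] for _ in range(1000)]
for _ in range(200):
    step(particles, dt=0.05, box_length=box, plane_positions=[9.0],
         flow_speed=1.0, sigma=0.1, rng=rng)
inside = all(0.0 <= x < box for x, _ in particles)
print("all particles inside the box:", inside)
```

With plain periodic boundaries, only the wrap step would be present, and a particle would re-enter on the left carrying whatever velocity it acquired in the wake of the obstacle. The reset at the plane is what keeps the incoming flow laminar in this sketch.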

Following a theoretical justification of the proposed boundary conditions, the scientists implemented them in the widely used molecular modelling programs LAMMPS and OpenMM and performed fluid flow calculations using supercomputers equipped with graphics accelerators. The researchers placed particular emphasis on maximising computational performance, recognising that in systems which comprise millions of atoms and require the calculation of several million time steps, a millisecond acceleration at each computational step translates to savings of several days, or even weeks, in supercomputer operation.


According to Vladislav Galigerov, master's student at HSE MIEM and one of the two primary co-authors of the paper, 'Tools for in-depth performance analysis are continuously evolving, including the Score-P tool for analysing performance data of parallel programs, which we used in this study. Developing standards for using such tools is essential to ensure that developers of programs for supercomputers, as they modify existing code or develop new ones, can conduct analyses according to these standards, allowing them to assess the effectiveness of their applications across various supercomputer architectures, including those to which they may not have direct access.'

The analysis for this paper was conducted using the Desmos supercomputer at the Joint Institute for High Temperatures of the Russian Academy of Sciences and the cHARISMa supercomputer at HSE University.

The video shows a lateral view of the simulation cell containing 230 million atoms. The colour represents the local density of particles: bluer regions indicate higher density, while lighter areas correspond to lower density. After the fluid flows around the cylinder, a stochastic Kármán vortex street forms in the flow. Also visible on the right are the planes beyond which particle velocities are redistributed, leading to gradual laminarisation of the flow.
